Key Takeaway

Centralize control of how AI agents talk and get resources so the platform can adapt messaging, batching, and routing in real time—raising throughput and meeting latency goals without changing each agent.

Key Findings

- A controller that watches runtime metrics and can change communication granularity (from token-by-token to batched), plus agent-level knobs, can improve multi-agent pipeline performance dramatically.
- A three-part stack (fine-grained message handling, lightweight telemetry, and a central controller) lets operators express high-level goals and have the system auto-tune itself.
- Simple, standardized agent hooks (a small shim with two calls) let the controller change agent behavior at runtime, avoiding bespoke code in each workflow.
- A prototype showed large throughput gains when the controller changed messaging strategy and when it also exercised deeper control over agents.

Data Highlights

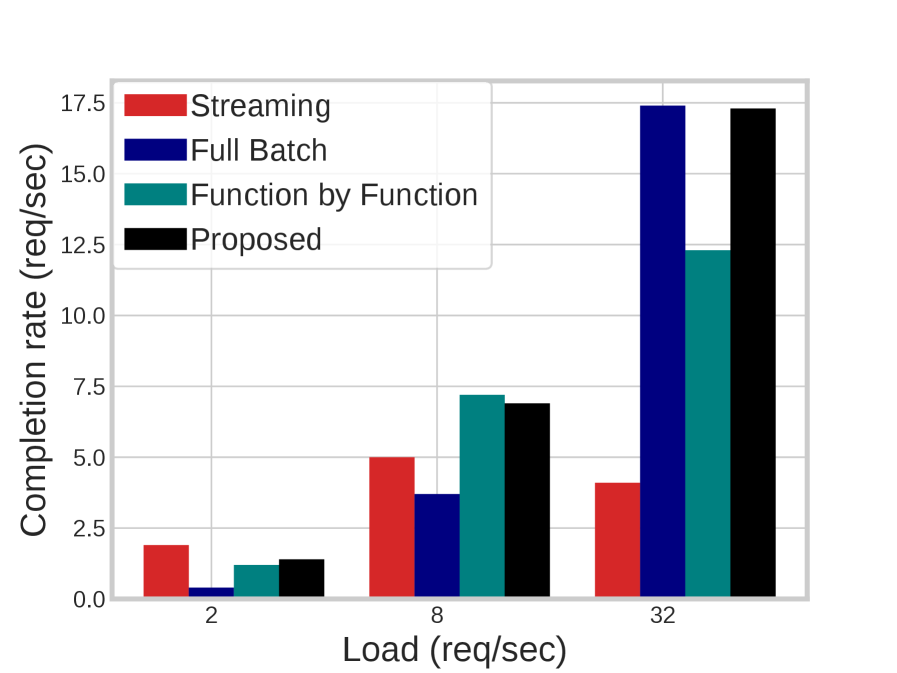

1. Up to 3.6× improvement in serving throughput by switching communication granularity (fine-grained control over batching/streaming).

2. An additional up to 2.3× throughput improvement when the controller also exercised deeper serving controls (e.g., routing, model selection, resource allocation).

3. The controller-agent interface is tiny: agents expose two standard functions (set and reset). For example, set('max_num_seqs', 4) sets the batch size to 4.
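
The two-call interface in the last highlight can be sketched as a small shim. This is a minimal illustration, not the paper's implementation: the class name `ControlShim`, the `get` helper, the default values, and the exact signature of `reset` are all assumptions. Only the names `set` and `reset` and the `max_num_seqs` example come from the source.

```python
from typing import Any, Dict


class ControlShim:
    """Hypothetical agent-side shim exposing set/reset to a controller."""

    def __init__(self, defaults: Dict[str, Any]) -> None:
        # Defaults are kept separately so reset() can restore them.
        self._defaults = dict(defaults)
        self._params = dict(defaults)

    def set(self, name: str, value: Any) -> None:
        """Override one tunable parameter at runtime."""
        if name not in self._defaults:
            raise KeyError(f"unknown parameter: {name}")
        self._params[name] = value

    def reset(self, name: str) -> None:
        """Restore one parameter to its default (assumed semantics)."""
        if name not in self._defaults:
            raise KeyError(f"unknown parameter: {name}")
        self._params[name] = self._defaults[name]

    def get(self, name: str) -> Any:
        """Helper the agent itself would use to read the current value."""
        return self._params[name]


# Illustrative defaults; the value 16 is made up for this sketch.
shim = ControlShim({"max_num_seqs": 16, "priority": 0})
shim.set("max_num_seqs", 4)  # the example call quoted in the source
```

Rejecting unknown parameter names is a design choice made here so that a controller typo fails loudly instead of silently creating a new setting.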

What This Means

Platform and infrastructure engineers building multi-agent AI pipelines can get predictable latency and higher throughput without rewriting agents. Product and ML engineers running complex agent chains (e.g., code generation + testing) can use these controls to trade responsiveness for throughput automatically. Researchers and tool builders interested in agent governance and runtime evaluation can leverage the controller to implement and test policies consistently.

Key Figures

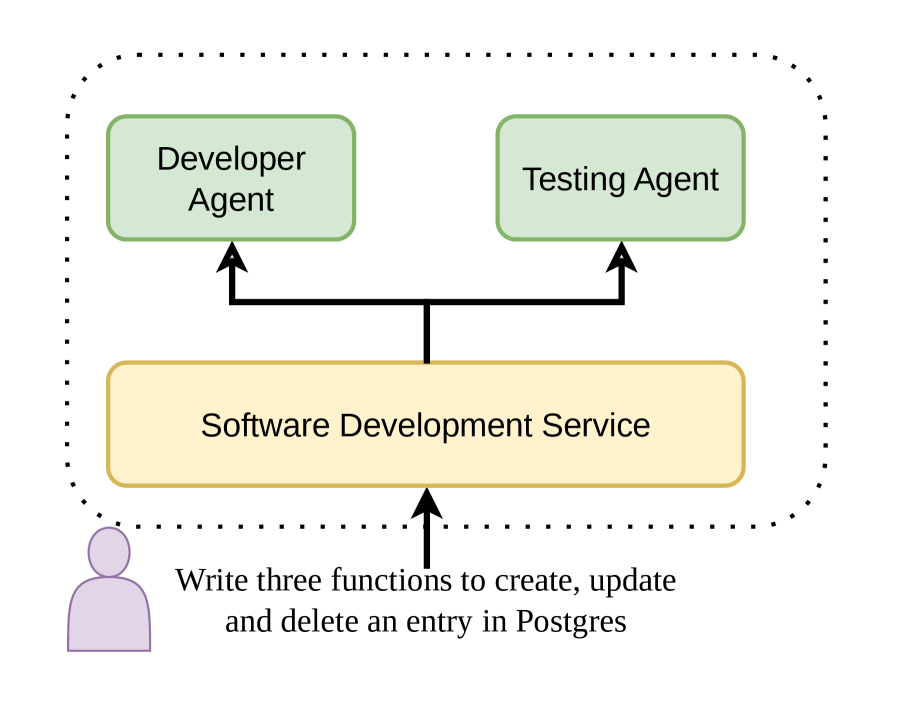

Fig 1: Figure 1. Agentic Software Developer: a software agent workflow in which the developer agent generates functions and the testing agent generates testing code.

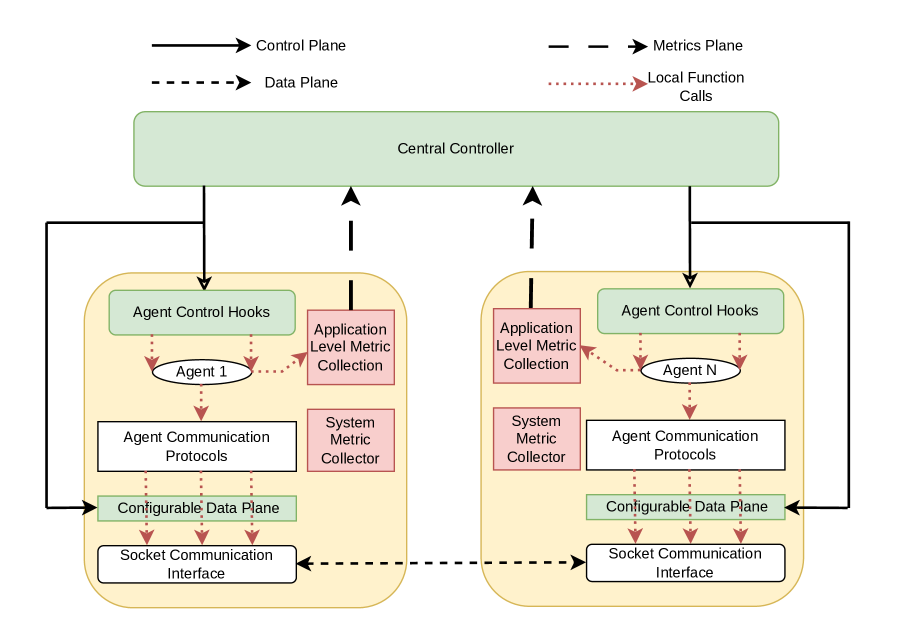

Fig 4: Figure 5. Our proposal: The control plane orchestrates both data plane and agent/tool actions based on global telemetry.

Fig 5: Figure 6. Request rate with control: Using control for message communication granularity.

Yes, But...

Results are from a preliminary prototype and depend on having timely, structured telemetry across heterogeneous runtimes, which can be hard to instrument in practice. Integrating the controller requires a small shim per agent/tool; while minimal, that still adds engineering work and may be limited by third-party services. The paper sketches declarative policy languages and control APIs but leaves open how to resolve conflicts, guarantee correctness, and scale metric collection at production volume.

Full Analysis

Modern AI workflows often chain multiple specialized agents (for planning, retrieval, execution, verification). Performance depends not only on model speed, but on how agents communicate: batching many calls can raise throughput, while token-level streaming lowers wait time for interactive tasks. Letting each workflow pick a static strategy upfront forces trade-offs and often hurts performance as load and resource contention change. Replacing that with a software-defined stack gives a central controller visibility into system and application metrics and the ability to change communication granularity, routing, priority, and even agent-specific settings at runtime.
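
The runtime trade-off between batching and streaming can be illustrated with a toy decision rule. This is a sketch under stated assumptions rather than the policy used in the paper: the `Metrics` fields, the thresholds, and the function `choose_granularity` are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    """Hypothetical telemetry snapshot for one agent-to-agent link."""
    queue_depth: int        # requests waiting to be served
    p95_latency_ms: float   # recent 95th-percentile latency


def choose_granularity(
    current: str,
    m: Metrics,
    latency_slo_ms: float,
    queue_threshold: int = 8,
) -> str:
    """Pick 'batch' or 'stream' for the next control interval."""
    if m.queue_depth >= queue_threshold:
        # Backlog is building: favor throughput by batching messages.
        return "batch"
    if m.p95_latency_ms > latency_slo_ms:
        # Little backlog but the latency goal is missed: favor streaming.
        return "stream"
    # Neither signal fired: keep the current mode to avoid flapping.
    return current


under_load = choose_granularity("stream", Metrics(20, 100.0), latency_slo_ms=200.0)
too_slow = choose_granularity("batch", Metrics(2, 350.0), latency_slo_ms=200.0)
steady = choose_granularity("batch", Metrics(2, 100.0), latency_slo_ms=200.0)
```

Keeping the current mode when no signal fires is a simple form of hysteresis. A static per-workflow choice, by contrast, corresponds to always returning `current`.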

The proposed stack has three parts: a data plane that supports flexible message granularities (token streaming to batched contexts), a metrics plane that supplies low-overhead telemetry, and a control plane that compiles high-level operator intents into runtime policies. Agents/tools register a tiny control API (two functions: set and reset) so the controller can change parameters like batch size or priority without bespoke integrations. The prototype shows up to 3.6× throughput gains by tuning communication granularity alone, and up to a further 2.3× when the controller also manipulates serving behavior (routing, model selection). The work highlights practical challenges (agent heterogeneity, metric fidelity, policy language design) and positions the approach as a way to get predictable SLAs, better resource efficiency, and simpler operator control over complex multi-agent systems.
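
How a control plane might turn an operator intent plus telemetry into set/reset calls can be sketched as a single control step. Everything here is illustrative: `Intent`, `StubAgent`, `control_step`, the halving rule, and the agent names and numbers are assumptions made for this example, not details from the paper.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple


@dataclass
class Intent:
    """Operator goal; the field name is hypothetical."""
    latency_slo_ms: float


@dataclass
class StubAgent:
    """Stand-in for an agent exposing the two-call control API."""
    defaults: Dict[str, Any]
    params: Dict[str, Any] = field(default_factory=dict)

    def __post_init__(self) -> None:
        self.params = dict(self.defaults)

    def set(self, name: str, value: Any) -> None:
        self.params[name] = value

    def reset(self, name: str) -> None:
        self.params[name] = self.defaults[name]


def control_step(
    intent: Intent,
    agents: Dict[str, StubAgent],
    telemetry: Dict[str, float],
) -> List[Tuple[str, str]]:
    """Apply one round of policy; returns (agent, action) pairs."""
    actions: List[Tuple[str, str]] = []
    for name, agent in agents.items():
        latency = telemetry.get(name)
        if latency is None:
            continue  # no fresh metric: leave this agent untouched
        if latency > intent.latency_slo_ms:
            # Over the latency goal: halve the batch size, never below 1.
            new_size = max(1, agent.params["max_num_seqs"] // 2)
            agent.set("max_num_seqs", new_size)
            actions.append((name, "set"))
        else:
            # Within the goal: fall back to the agent's default.
            agent.reset("max_num_seqs")
            actions.append((name, "reset"))
    return actions


agents = {
    "developer": StubAgent({"max_num_seqs": 8}),
    "tester": StubAgent({"max_num_seqs": 8}),
}
actions = control_step(
    Intent(latency_slo_ms=200.0),
    agents,
    {"developer": 350.0, "tester": 90.0},  # made-up latencies in ms
)
```

In this sketch the metrics plane is reduced to a plain dictionary and the policy to one threshold. A real controller would also need to handle the conflict-resolution and metric-fidelity questions the summary lists as open.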

Credibility Assessment:

Authors include Jayanth Srinivasa (h-index ~13) and other researchers with modest h-indices, giving the work moderate credibility despite its arXiv venue.