The Big Picture

Giving AI agents role-based personas can make them ignore explicit incentives and avoid payoff-optimal strategies, so personas can block true strategic reasoning.


The Evidence

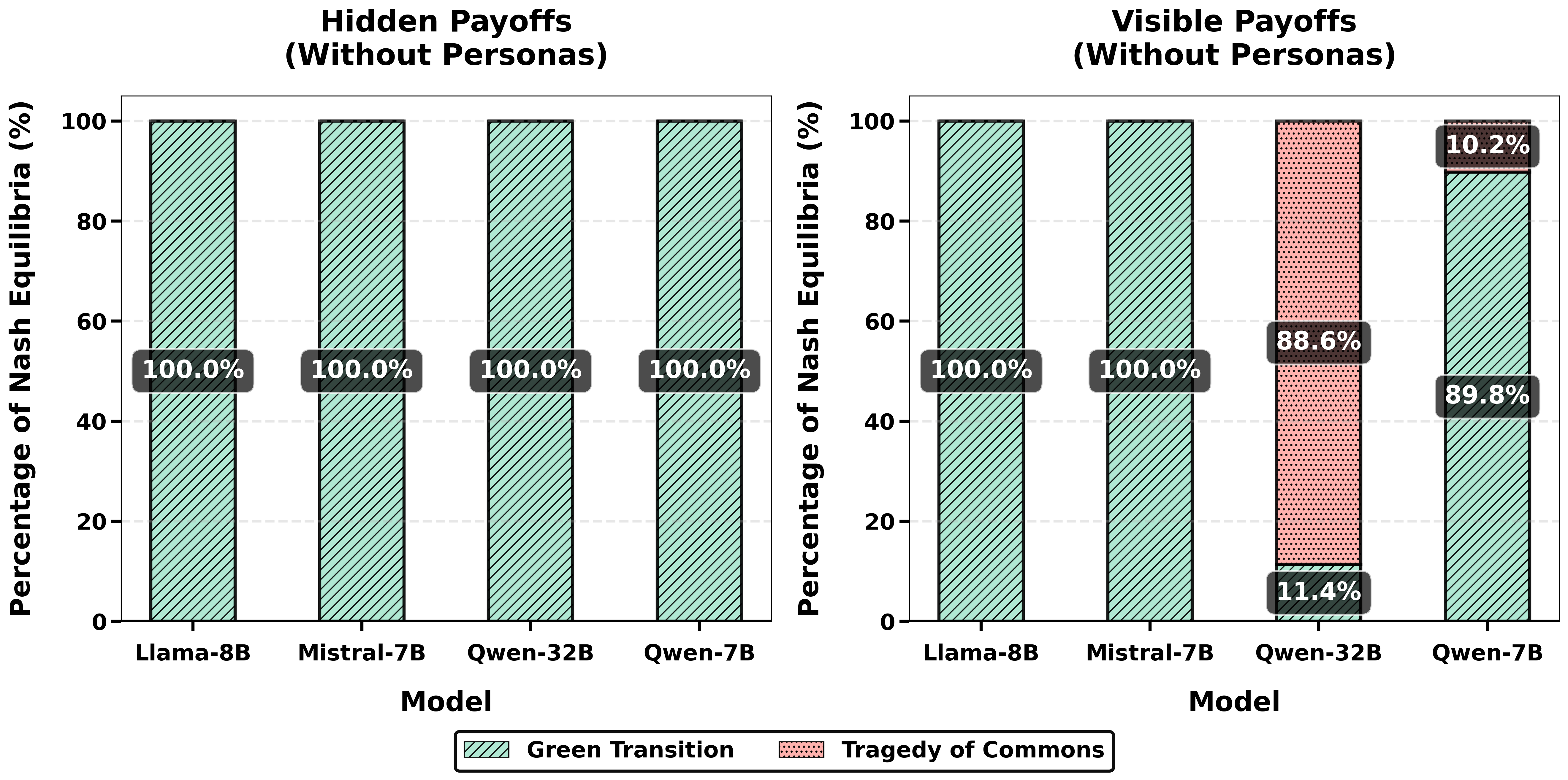

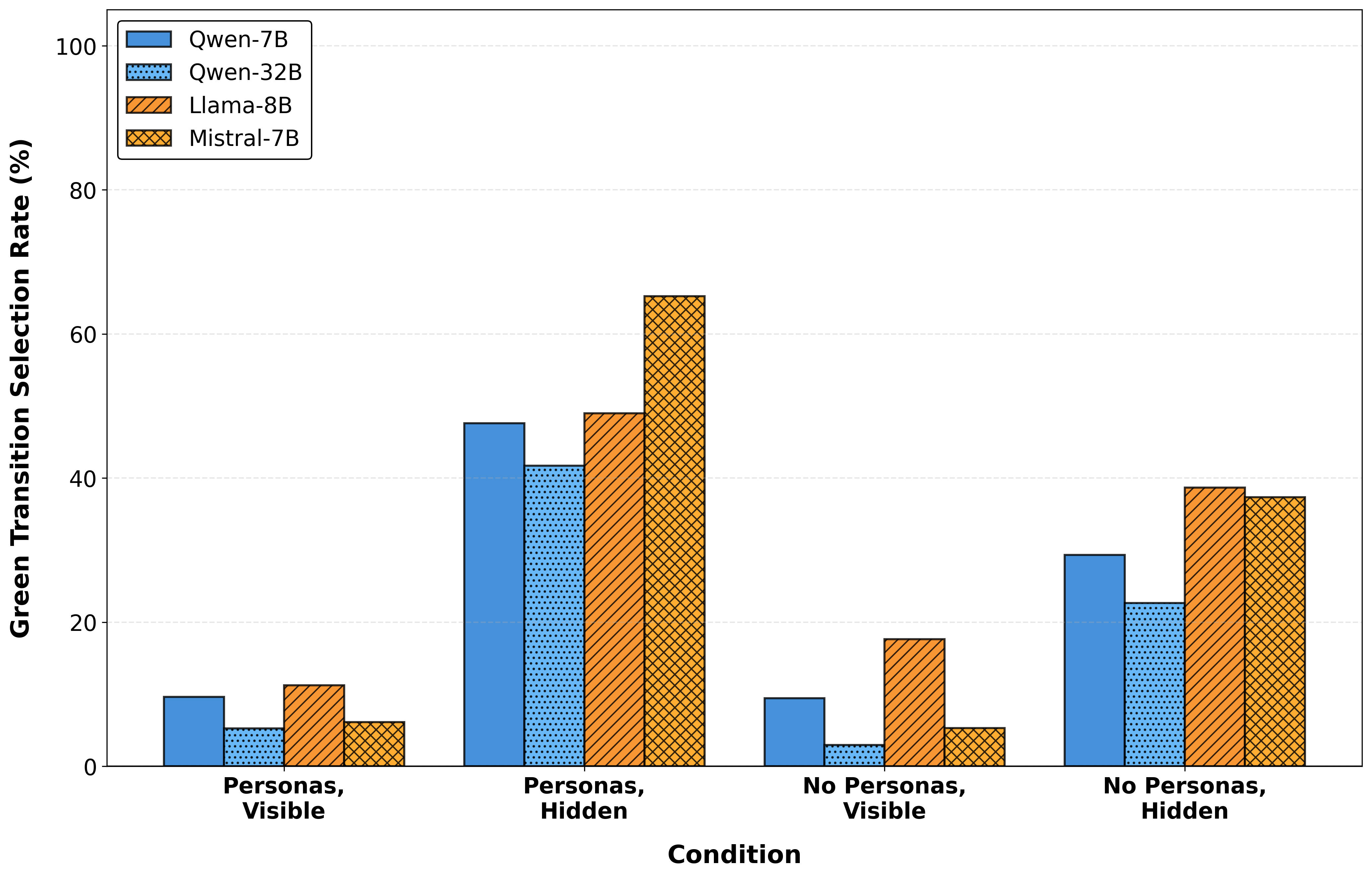

When agents had role personas, they systematically favored persona-consistent, socially preferred actions (the "green" outcome) even when explicit payoffs showed that polluting was more profitable. In 12 of 16 experiments, persona-bearing agents reached 0% Nash equilibrium in economic scenarios despite seeing full payoff information. Removing personas and showing payoffs allowed some model families to recover payoff-optimal equilibria, which suggests that both persona removal and explicit payoff visibility are needed for strategic reasoning in these settings. Effects varied by model family: some models stayed identity-driven across conditions, while others shifted toward payoff-based reasoning when personas were removed. This aligns with the Consensus-Based Decision Pattern. The chain-of-thought reasoning enabled in the setup also echoes considerations from the Chain of Thought Pattern.

Data Highlights

1. 12 of 16 experiments (75%) produced 0% Nash equilibrium in economic scenarios when personas were present, even with explicit payoff matrices.

2. With personas, models selected Green Transition actions in 20%–59% of economic scenario runs; Qwen models showed the highest Green rates (40%–59%), while Llama and Mistral were at 20%–30%.

3. Persona presence drove equilibrium selection toward Green Transition in the reported analyses (100% Green Transition in some tables); removing personas while keeping payoffs visible enabled certain models (notably Qwen variants) to reach much higher Nash rates.
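The highlights above mix two different measurements: a Nash rate over joint four-agent profiles and a Green Transition rate over individual actions. The sketch below shows one way both could be computed from logged runs. It is an illustration under stated assumptions, not the authors' evaluation code: the `"G"`/`"P"` labels, the `summarize` helper, and counting Green per individual action are hypothetical choices, and for simplicity it assumes the all-pollute profile (Tragedy of the Commons) is the only equilibrium a run can reach.

```python
from dataclasses import dataclass

# Hypothetical action labels: "G" = Green Transition action, "P" = pollute.
# Assumption: in an economic scenario the all-pollute profile (Tragedy of the
# Commons) is the only Nash equilibrium a run can land on.
TRAGEDY = ("P", "P", "P", "P")


@dataclass
class CellSummary:
    nash_rate: float          # share of runs whose joint profile is an equilibrium
    green_action_rate: float  # share of individual agent actions that are "G"


def summarize(profiles, equilibria=frozenset({TRAGEDY})):
    """Summarize logged 4-agent profiles for one model x condition cell."""
    if not profiles:
        raise ValueError("no runs to summarize")
    nash_hits = sum(1 for p in profiles if tuple(p) in equilibria)
    actions = [a for p in profiles for a in p]
    return CellSummary(
        nash_rate=nash_hits / len(profiles),
        green_action_rate=actions.count("G") / len(actions),
    )


# Made-up runs for illustration only (not data from the study).
runs = [("G", "G", "P", "G"), ("P", "P", "P", "P"), ("G", "P", "P", "P"), ("P", "G", "P", "P")]
print(summarize(runs))
```

With these toy runs the summary reports a Nash rate of 0.25 and a Green action rate of 0.3125. A cell where no run reaches all-pollute scores a 0.0 Nash rate no matter how many Green actions it contains, which is why the two metrics can move independently.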

What This Means

Engineers building multi-agent simulations and decision-making tools should care because persona choices can silently change whether agents reason strategically or act according to their assigned identities. Technical leaders and evaluators should treat persona design as a governance decision: document persona use, run agent-to-agent evaluations that test for strategic behavior, and pick model architectures that match the intended behavior. For governance framing, see AI Governance. Teams can also apply the Supervisor Pattern to structure evaluation and oversight of agent behavior.
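One way to make "test for strategic behavior" concrete is a per-agent best-response check: compare the payoff of the action each agent chose with the best payoff it could have obtained given the other agents' choices. The sketch below is a minimal illustration assuming a hypothetical public-goods payoff (each green action adds `BENEFIT` to every player, and a green player also pays `COST`), which is not the payoff matrix used in the study; `incentive_gap` and `flag_non_strategic` are illustrative names, not an existing API.

```python
# Hypothetical public-goods payoff, NOT the study's matrix: each "G" (green)
# action adds BENEFIT to every player, and a green player also pays COST.
BENEFIT, COST = 1.0, 2.0
ACTIONS = ("G", "P")


def payoff(profile, i):
    """Payoff to agent i under the joint action profile (a tuple)."""
    return BENEFIT * profile.count("G") - (COST if profile[i] == "G" else 0.0)


def incentive_gap(profile, i):
    """Payoff agent i gives up by not best-responding to the others' actions."""
    best = max(payoff(profile[:i] + (a,) + profile[i + 1:], i) for a in ACTIONS)
    return best - payoff(profile, i)


def flag_non_strategic(profile, roles, tol=1e-9):
    """Return the roles whose chosen action leaves payoff on the table."""
    return [role for i, role in enumerate(roles) if incentive_gap(profile, i) > tol]


roles = ("Industrialist", "Government", "Environmental Activist", "Citizen")
print(flag_non_strategic(("P", "G", "G", "P"), roles))
```

Under this toy payoff the call flags `Government` and `Environmental Activist`, each of which could gain 1.0 by switching to polluting. In a real evaluation, a persistent non-zero gap concentrated on particular roles would be one possible signal that identity, rather than incentives, is driving choices.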


Key Figures

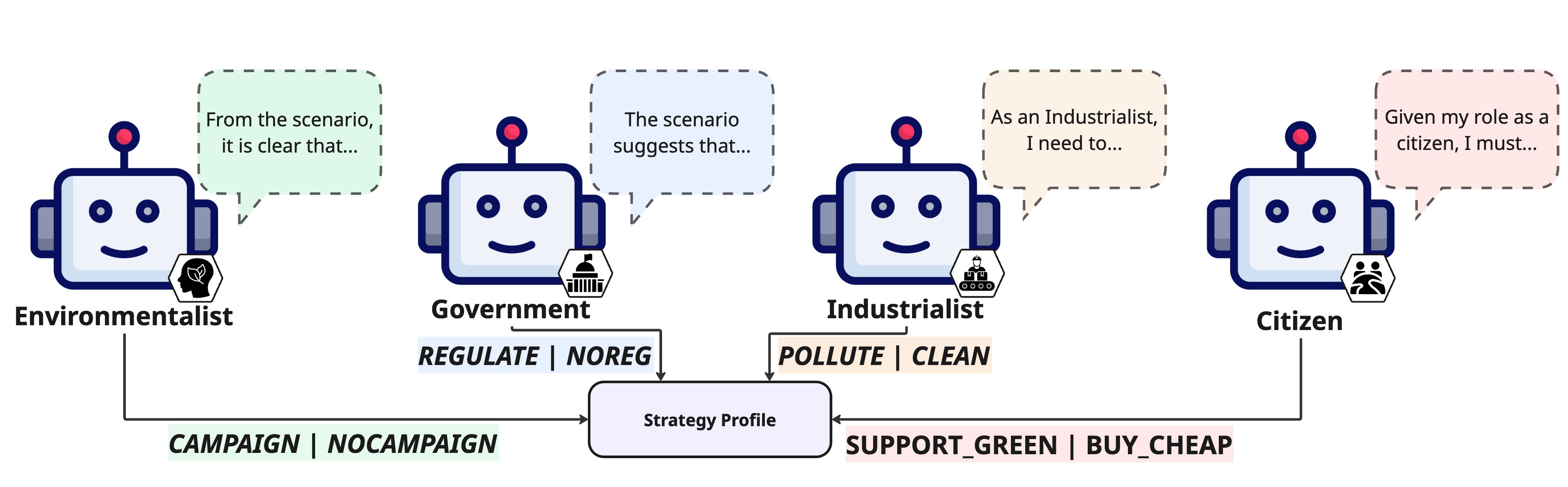

Figure 1. The diagram shows our multi-agent game structure with chain-of-thought reasoning. Each agent analyzes the scenario and its role identity, then selects one of two binary actions. The arrows from each agent converge on a central Strategy Profile box, representing simultaneous action selection: all four agents choose actions concurrently, and their combined choices form the strategy profile evaluated for Nash equilibrium. The dotted speech bubbles contain each agent's chain-of-thought reasoning process.

Figure 2. Economic Scenarios Nash Equilibrium Rates by Model and Condition. This heatmap visualizes Nash equilibrium achievement rates in economic scenarios (where Tragedy of Commons is the payoff-optimal equilibrium) across all four models and four experimental conditions. Color intensity represents the Nash rate percentage, with darker blue indicating higher rates.

Figure 3. Equilibrium Selection Patterns: Green Transition vs Tragedy of Commons (Without Personas Condition). This figure shows the distribution of Nash equilibria between Green Transition and Tragedy of Commons outcomes across all four models in the no-personas condition.

Figure 4. Green Transition Action Selection Rates in Economic Scenarios. This grouped bar chart shows the percentage of action profiles where models select Green Transition actions (socially preferred but payoff-suboptimal) in economic scenarios where Tragedy of Commons is the payoff-optimal equilibrium. When personas are present, all models show substantial Green Transition selection rates (20-59%) despite Tragedy being payoff-optimal, demonstrating that role identity bias operates at the action level even when models fail to coordinate on Nash equilibria. Qwen models show the highest Green Transition rates with personas (40-59%), while Llama and Mistral show moderate rates (20-30%). Removing personas substantially reduces Green Transition selection for all models, with rates dropping to near zero when payoffs are visible and personas are absent, confirming that personas drive the preference for socially aligned actions. The persistence of Green Transition selection with personas, even in conditions where Nash equilibrium rates are zero, indicates that role identity bias affects individual action choices independently of coordination success, revealing a pervasive influence of persona-driven reasoning on strategic decision-making.
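Figure 1 describes how the four agents' simultaneous choices form a strategy profile that is then checked for Nash equilibrium. With two actions per agent there are 2^4 = 16 joint profiles. The sketch below enumerates them and applies the standard pure-strategy test: a profile is an equilibrium if no single agent can gain by changing only its own action. The payoff function is a hypothetical public-goods game chosen so that polluting is dominant while all-green pays everyone more, loosely mirroring the Green Transition vs Tragedy of Commons tension; it is not the study's payoff matrix.

```python
from itertools import product

ACTIONS = ("G", "P")  # hypothetical labels: G = green/clean, P = pollute
ROLES = ("Industrialist", "Government", "Environmental Activist", "Citizen")


def payoff(profile, i, benefit=1.0, cost=2.0):
    """Hypothetical public-goods payoff, NOT the study's matrix.

    Each green action adds `benefit` to every player; a green player also
    pays `cost`. With benefit < cost < 4 * benefit, polluting strictly
    dominates, yet all-green pays everyone more than all-pollute.
    """
    return benefit * profile.count("G") - (cost if profile[i] == "G" else 0.0)


def is_nash(profile):
    """True if no single agent can gain by switching only its own action."""
    for i, current in enumerate(profile):
        for alt in ACTIONS:
            if alt != current:
                deviated = profile[:i] + (alt,) + profile[i + 1:]
                if payoff(deviated, i) > payoff(profile, i):
                    return False
    return True


# All 2**4 = 16 joint profiles, in the same role order as ROLES.
profiles = list(product(ACTIONS, repeat=len(ROLES)))
equilibria = [p for p in profiles if is_nash(p)]
print(len(profiles), equilibria)
```

Under these assumed payoffs the only pure equilibrium is all-pollute, so agents that converge on all-green would score a 0% Nash rate even though each earns more than at the equilibrium.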

Limitations

Results come from models sized 7B–32B across three families (Qwen, Llama, Mistral), so behavior may differ for larger models or other architectures. Payoffs were shown as full 16-profile matrices, which may be hard for models to process; alternative payoff presentations could change outcomes. The experiments use a specific 4-player environmental game; other game structures, role sets, or domains might produce different persona effects. For safety and robustness, researchers should also keep Context Drift in mind as a potential risk when varying scenarios.

Methodology & More

Researchers ran a controlled experiment in which four AI agents (Industrialist, Government, Environmental Activist, and Citizen) simultaneously chose between two actions (e.g., pollute vs. clean). The setup creates two focal outcomes: a socially preferred Green Transition and a payoff-optimal Tragedy of the Commons. The team crossed two variables, whether agents were given explicit role personas and whether full payoff matrices were visible, and tested multiple model families with chain-of-thought reasoning enabled.

Findings show that role personas act as a strong normative bias. When personas were present, agents overwhelmingly favored persona-aligned "green" actions and often failed to converge to Nash equilibria even when payoffs clearly favored the pollute outcome: 12 of 16 persona-bearing experiments had 0% Nash equilibrium in economic scenarios. Removing personas and showing payoffs let some models (notably the Qwen family) recover strategic, payoff-maximizing behavior.

Practical implications: persona design is not cosmetic. It is a behavioral control with governance consequences. Teams should explicitly test agent-to-agent behavior, document persona choices, experiment with payoff presentation formats, and consider model family when they need true strategic reasoning rather than identity-driven simulation.
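The experimental design described above crosses two binary factors, personas present or absent and payoffs visible or hidden, giving four conditions. The sketch below shows one way such a grid and per-agent prompts might be assembled. All prompt wording, the `Condition` type, and `build_prompt` are hypothetical illustrations, not the study's actual prompts; `payoff_table` is a placeholder for whatever payoff rendering is used, which the Limitations section notes could itself affect outcomes.

```python
from dataclasses import dataclass
from itertools import product

ROLES = ("Industrialist", "Government", "Environmental Activist", "Citizen")


@dataclass(frozen=True)
class Condition:
    personas: bool         # give each agent an explicit role persona?
    payoffs_visible: bool  # include the full payoff matrix in the prompt?


def build_prompt(role, cond, scenario, payoff_table):
    """Assemble one agent's prompt for a condition (wording is hypothetical)."""
    if cond.personas:
        parts = [f"You are the {role}. Reason and act as this role would."]
    else:
        parts = ["You are one of four players in a simultaneous-move game."]
    parts.append(scenario)
    if cond.payoffs_visible:
        parts.append("Payoffs for every strategy profile:\n" + payoff_table)
    # The study enabled chain-of-thought reasoning; this instruction approximates that.
    parts.append("Think step by step, then answer with one action: CLEAN or POLLUTE.")
    return "\n\n".join(parts)


# Cross the two binary factors: 2 x 2 = 4 conditions.
CONDITIONS = [Condition(p, v) for p, v in product((True, False), repeat=2)]

for cond in CONDITIONS:
    prompts = [build_prompt(r, cond, "<scenario text>", "<16-row payoff table>") for r in ROLES]
    print(cond, len(prompts))
```

Keeping the role name out of the no-persona prompts is the design choice that isolates the persona factor; everything else in the prompt stays fixed across conditions.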


Credibility Assessment:

Authors have low h-indexes and no strong affiliation or venue signals; limited credibility.