The Big Picture

Converting local bearing-only sensor readings into a small image and training decentralized policies with a global cohesion reward produces swarm behaviors that gather much faster while keeping groups connected better than classic analytic rules.

ON THIS PAGE

The Evidence

Encoding each agent’s local view as a 75×75 pixel image lets a convolutional network extract spatial patterns from bearing-only sensing and feed a decentralized policy. Training with centralized information but decentralized execution (global reward + local penalties) yields faster convergence and stronger cohesion than the proven analytic gathering rule and prior neural methods in simulation. A critical reward term penalizing loss of neighbors trades off speed vs. safety: too large a penalty freezes agents, too small causes risky, fragmented but fast gathers. Overall, learned policies speed up gathering while largely preserving connectivity under challenging initial layouts. consensus-based decision pattern and guardrails pattern.

Data Highlights

1Training used 300 million environment steps total (150M on 10-agent scenarios, then 150M on 20-agent scenarios).

2Local observations are projected into a 75×75 pixel grid (each neighbor drawn as a 3×3 block); simulation visibility range was 50 units with step size 0.5.

3Ablation on neighbor-loss penalty: penalty = -1 caused agents to stay stationary (overly conservative); penalty = 0 produced fast but lower-quality convergence (connections lost more often).

What This Means

Engineers building decentralized robot swarms (search-and-rescue, environmental monitoring) who need faster, robust gathering without central controllers should pay attention. Technical leaders exploring hybrid evaluation or deployment strategies can use the image-based sensing idea to simplify on-board perception while leveraging centralized training for safety and cohesion. image-based sensing idea to illustrate the concept here.

Not sure where to start?Get personalized recommendations

Key Figures

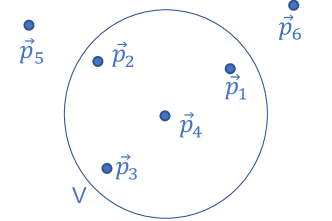

Fig 1: Figure 1: Convergence of 20 agents. Left: local observations of a typical agent, where white pixels indicate detected neighbors within a limited sensing range. Right: global view of the swarm’s convergence trace.

Fig 2: Figure 2: Sensor to Pixels framework.

Fig 3: Figure 3: Preprocessing from local observation to pixel-grid representation.

Fig 4: Figure 4: Actor Critic NN Architecture.

Ready to evaluate your AI agents?

Learn how ReputAgent helps teams build trustworthy AI through systematic evaluation.

Learn MoreKeep in Mind

Training is compute-heavy and sensitive to hyperparameters—the approach required 300M steps and careful reward tuning to get the right balance of speed and connectivity. Learned policies do not provide formal guarantees of connectivity like analytic rules, so worst-case fragmentation remains possible in unseen layouts. Results are demonstrated in simulation with bearing-only, 2D sensing and limited-range visibility; real-world sensors, noise, or obstacles may reduce transferability without further adaptation. context drift.

Methodology & More

Local bearing-only readings are converted into a small image centered on the agent (75×75 pixels), with neighbors rendered as 3×3 blocks. Those images feed a convolutional neural network that produces actions via an actor-critic policy. Training uses centralized information (so the trainer can compute global rewards and stabilize learning) but policies run independently on each agent at execution time. The multi-agent variant of proximal policy optimization was used; training followed a curriculum of 150M steps on 10-agent scenarios and 150M on 20-agent scenarios, with global and local rewards designed to encourage both rapid gathering and preservation of neighbor links. centralized training and adaptive system. Learned policies converged faster and produced tighter gatherings than a classical analytic gathering rule and an earlier neural baseline, at the cost of losing formal connectivity guarantees. A key design knob was a penalty for losing a neighbor: making it too large stopped agents from moving, while removing it led to quick but riskier convergence. The approach is promising for time-critical swarm tasks where speed and practical cohesion matter, but it requires heavy training, careful reward tuning, and further work to validate transfer to noisy, obstacle-filled real environments or to provide formal safety guarantees. A practical next step is an adaptive system that chooses between analytic and learned controllers during deployment depending on risk tolerance. LLM-as-Judge.

Avoid common pitfallsLearn what failures to watch for

Credibility Assessment:

Includes at least one recognized researcher (Alfred M. Bruckstein is a known academic) and others with some track record, so moderate credibility.