Key Takeaway

Agent Mallard simulates candidate clearances through a realistic digital twin, finds coordinated, sector-wide conflict-free plans, and only issues commands that remain safe under modeled uncertainties.


Core Insights

Combining forward simulation with a lane-based airspace design turns complex lateral control into a discrete choice problem whose candidate solutions can be verified before any action is taken. The agent evaluates efficient baseline plans, detects conflicts up to an hour ahead under uncertain conditions (wind, pilot delay, communication loss), and searches for coordinated fixes that keep the whole sector safe. Human controllers who walked through the agent’s decisions found its reasoning familiar and its manoeuvres operationally plausible. The system is still at an early stage and requires full curriculum-based testing before deployment. Because every candidate plan is checked in simulation before it is issued, the lane-based design and forward simulation together underpin the system’s verifiability and safety.
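The conflict-detection step can be illustrated with the standard closest-point-of-approach (CPA) test for two aircraft on straight, constant-speed, level tracks. This is a minimal sketch under stated assumptions, not Mallard’s detector: the 5 NM lateral and 1,000 ft vertical minima, the flat x/y frame in nautical miles, and the names `Track`, `time_of_cpa` and `predicts_conflict` are all hypothetical.

```python
import math
from dataclasses import dataclass

LATERAL_MIN_NM = 5.0      # assumed lateral separation minimum
VERTICAL_MIN_FT = 1000.0  # assumed vertical separation minimum
HORIZON_MIN = 60.0        # look-ahead window in minutes


@dataclass
class Track:
    x: float       # NM east of a reference point
    y: float       # NM north of a reference point
    vx: float      # ground velocity east, NM per minute
    vy: float      # ground velocity north, NM per minute
    alt_ft: float  # level flight assumed over the horizon


def time_of_cpa(a: Track, b: Track) -> float:
    """Time of closest approach in minutes, clamped to [0, HORIZON_MIN]."""
    rx, ry = b.x - a.x, b.y - a.y
    vx, vy = b.vx - a.vx, b.vy - a.vy
    v_sq = vx * vx + vy * vy
    if v_sq == 0.0:
        return 0.0  # identical velocities: the separation never changes
    t = -(rx * vx + ry * vy) / v_sq
    return min(max(t, 0.0), HORIZON_MIN)


def predicts_conflict(a: Track, b: Track) -> bool:
    """True if lateral and vertical minima are both infringed within the horizon."""
    if abs(a.alt_ft - b.alt_ft) >= VERTICAL_MIN_FT:
        return False  # vertically separated for the whole horizon
    t = time_of_cpa(a, b)
    dx = (b.x + b.vx * t) - (a.x + a.vx * t)
    dy = (b.y + b.vy * t) - (a.y + a.vy * t)
    return math.hypot(dx, dy) < LATERAL_MIN_NM
```

Because the squared distance between two straight-line tracks is a convex function of time, clamping the CPA time to the 60-minute window yields the minimum distance within that window. A full digital twin would replace these straight lines with simulated trajectories that include climbs, descents and turns.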


Data Highlights

1. Forward-simulates the next 60 minutes of traffic under multiple scenarios, including wind, delayed pilot responses and a 15-minute communication loss.

2. The operational evaluation loop repeats every few seconds, so plans are continuously re-evaluated and adapted as conditions change.

3. Validation uses 30-minute training scenarios from the Machine Basic Training curriculum that progressively increase in tactical complexity.
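The principle of issuing only clearances that stay safe in every modelled scenario can be sketched as a worst-case check over a small scenario ensemble. Everything below is a hypothetical toy: one head-on pair at 7 NM/min (420 kt) each, a 1,000 ft/min descent clearance for aircraft A, a 2-minute pilot delay, and a 15-minute communication loss beginning one minute from now. Wind is left out because a uniform wind adds the same vector to both ground velocities and so leaves their relative motion unchanged.

```python
from dataclasses import dataclass
from typing import Optional

LATERAL_MIN_NM = 5.0      # assumed lateral minimum
VERTICAL_MIN_FT = 1000.0  # assumed vertical minimum
HORIZON_MIN = 60.0        # look-ahead window (minutes)
STEP_MIN = 0.1            # simulation time step (minutes)


@dataclass(frozen=True)
class Scenario:
    name: str
    pilot_delay_min: float = 0.0
    comms_loss_start_min: Optional[float] = None  # None means no communication loss
    comms_loss_len_min: float = 15.0


def execution_time(issue_min: float, s: Scenario) -> float:
    """Minute at which the pilot actually starts the manoeuvre in scenario s."""
    start = issue_min
    if s.comms_loss_start_min is not None:
        lost_until = s.comms_loss_start_min + s.comms_loss_len_min
        if s.comms_loss_start_min <= issue_min < lost_until:
            start = lost_until  # clearance only gets through once contact is restored
    return start + s.pilot_delay_min


def altitude_a(t: float, start: float) -> float:
    """Aircraft A descends from 35,000 ft to 33,000 ft at 1,000 ft/min after `start`."""
    if t <= start:
        return 35000.0
    return max(33000.0, 35000.0 - 1000.0 * (t - start))


def plan_is_safe(issue_min: float, s: Scenario) -> bool:
    """Step through the horizon and flag any loss of separation."""
    start = execution_time(issue_min, s)
    for i in range(round(HORIZON_MIN / STEP_MIN) + 1):
        t = i * STEP_MIN
        xa, xb = 7.0 * t, 100.0 - 7.0 * t  # head-on on one route, 7 NM/min each
        lateral = abs(xb - xa)
        vertical = 35000.0 - altitude_a(t, start)  # B stays at 35,000 ft
        if lateral < LATERAL_MIN_NM and vertical < VERTICAL_MIN_FT:
            return False
    return True


SCENARIOS = [
    Scenario("nominal"),
    Scenario("pilot delay", pilot_delay_min=2.0),
    Scenario("comms loss", comms_loss_start_min=1.0),
]


def robust(issue_min: float) -> bool:
    """Accept the plan only if it is safe in every modelled scenario."""
    return all(plan_is_safe(issue_min, s) for s in SCENARIOS)
```

In this toy the same descent is robust when issued immediately (`robust(0.0)`) but fails if deferred to minute 3, because a deferred clearance cannot reach the pilot during the communication loss, even though it would be safe in the nominal scenario.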

What This Means

Air traffic system engineers and technical leaders evaluating AI for decision support should care because the design shows a practical path to verifiable, controller-friendly automation. Researchers working on agent reliability and continuous agent evaluation can use Mallard’s simulation-first, lane-based approach as a reproducible pattern for safe tactical automation, and organizations deploying such agents can apply the same continuous evaluation to monitor and improve their performance over time.

Key Figures

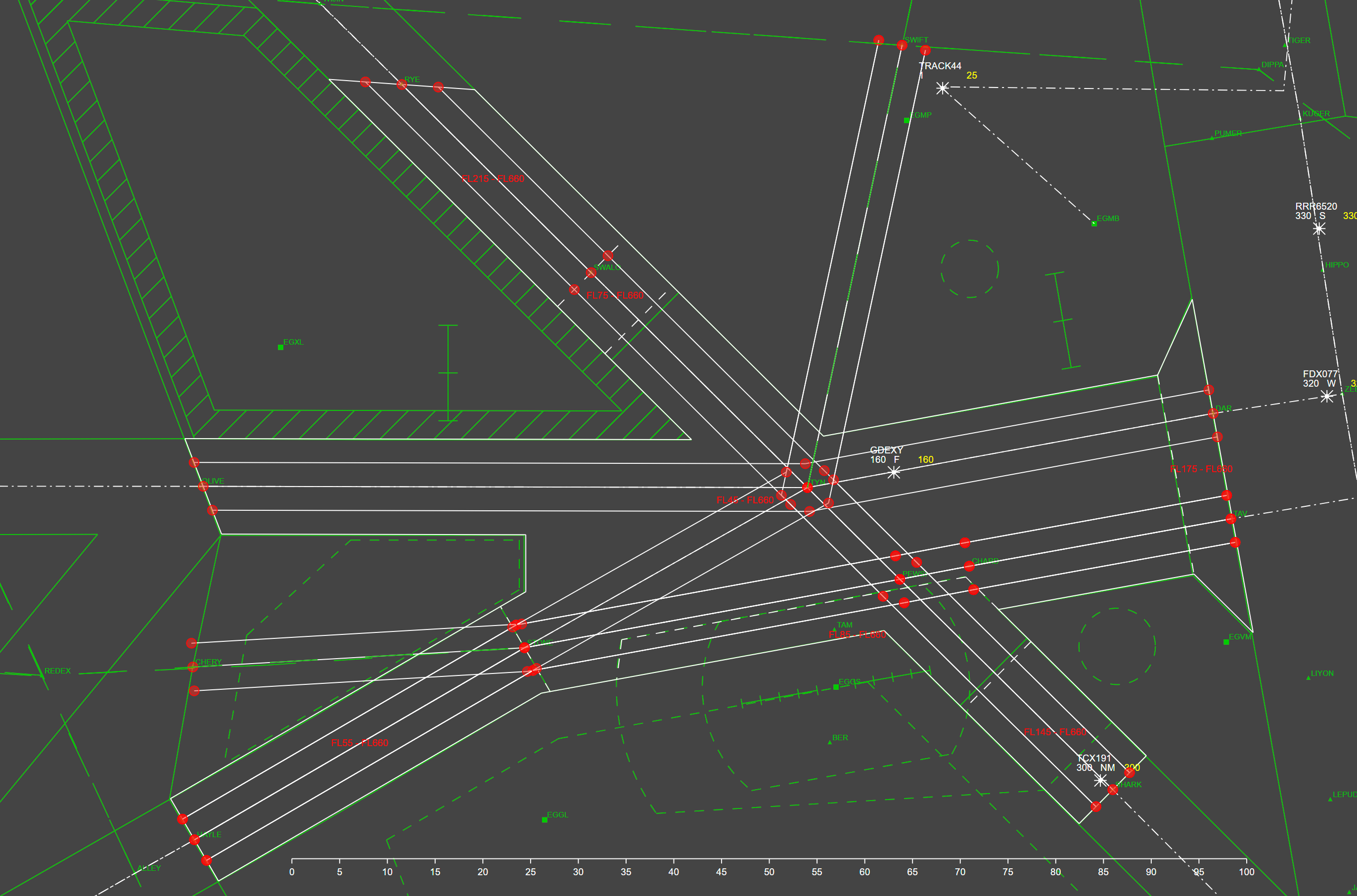

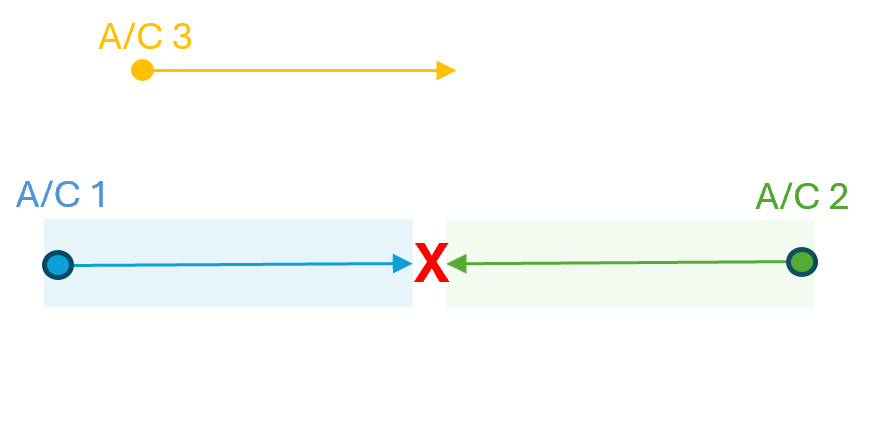

Fig 1: Agent Mallard’s operational flowchart. Blue: initialisation (airspace state received, plans generated if needed). Yellow: evaluation (plan simulated on the digital twin). Red: deconfliction (if unsafe, a backtracking search looks for a safe alternative; see Sec. 3.5.4). Green: execution (if safe, conditions are checked and actions issued). If the conditions are never met, the aircraft misses its coordination, is deemed unsafe, and is planned again.

Fig 2: A set of deconflicted lanes for a training sector. The Right and Left lanes for each route are geometrically deconflicted from each other.

Fig 3: Example visualisation of Agent Mallard’s initial flight plans in the BluebirdDT digital twin interface, showing intended routes based on systemised airspace principles.
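One pass through the cycle in Figure 1 can be sketched as a function that the agent would call every few seconds. The names `Clearance`, `Aircraft`, `run_cycle` and the along-track trigger are hypothetical; `simulate_is_safe` and `deconflict` are placeholders for the digital twin and the backtracking search, and issuing nothing when deconfliction fails is an assumption, since the source does not say what happens in that case.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Clearance:
    text: str
    trigger_along_track_nm: float  # state-based trigger: issue once the aircraft reaches this point


@dataclass
class Aircraft:
    callsign: str
    along_track_nm: float
    plan: Optional[List[Clearance]] = None


def run_cycle(aircraft: List[Aircraft],
              make_plan: Callable[[Aircraft], List[Clearance]],
              simulate_is_safe: Callable[[List[Aircraft]], bool],
              deconflict: Callable[[List[Aircraft]], bool]) -> List[str]:
    """One pass of the initialise -> evaluate -> deconflict -> execute cycle."""
    # Blue (initialisation): generate plans only where none exist yet
    for ac in aircraft:
        if ac.plan is None:
            ac.plan = make_plan(ac)
    # Yellow (evaluation): simulate the whole sector on the digital twin
    if not simulate_is_safe(aircraft):
        # Red (deconfliction): expected to repair plans in place; issue nothing if it fails
        if not deconflict(aircraft):
            return []
    # Green (execution): issue clearances whose geometric condition is now met
    issued: List[str] = []
    for ac in aircraft:
        pending: List[Clearance] = []
        for c in ac.plan or []:
            if ac.along_track_nm >= c.trigger_along_track_nm:
                issued.append(f"{ac.callsign} {c.text}")
            else:
                pending.append(c)  # condition not met yet; re-checked next cycle
        ac.plan = pending
    return issued
```

A deployment would call `run_cycle` periodically with fresh airspace state on each pass. Gating each clearance on a geometric condition rather than a clock time is what the text calls a state-based trigger: a clearance fires when the aircraft reaches the right point, however early or late it gets there.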


Considerations

Mallard assumes systemised airspace in which aircraft follow GPS-guided lanes and meet RNAV1 performance; it is not designed for free-route airspace or for extreme weather-driven re-routing. Validation so far is preliminary: expert walkthroughs and limited digital twin tests, not yet a full pass of the formal training curriculum. Quantitative metrics (separation assurance rates, fuel/time efficiency, computational performance) and a formal comparison of the agent’s solutions with those of human experts have yet to be collected. Context drift is a further potential failure mode that could undermine long-term reliability.
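The geometric deconfliction of lanes shown in Figure 2 can be approximated by a distance check between lane centrelines. This sketch rests on simplifying assumptions: lanes are 2D polylines at the same level, the lateral minimum is taken to be 5 NM, and each aircraft is assumed to stay within RNAV 1’s ±1 NM total system error, although that figure is a 95% containment value rather than a hard bound. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in nautical miles

RNAV1_TSE_NM = 1.0      # assumed worst-case cross-track error per aircraft
LATERAL_MIN_NM = 5.0    # assumed lateral separation minimum


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to segment ab."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    # Project p onto the segment and clamp to its endpoints
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def segments_cross(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """True if the two segments properly cross each other."""
    def orient(a: Point, b: Point, c: Point) -> float:
        return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    return (orient(q1, q2, p1) * orient(q1, q2, p2) < 0
            and orient(p1, p2, q1) * orient(p1, p2, q2) < 0)


def segment_distance(p1: Point, p2: Point, q1: Point, q2: Point) -> float:
    if segments_cross(p1, p2, q1, q2):
        return 0.0
    # For non-crossing segments the minimum is attained at an endpoint
    return min(point_segment_distance(p1, q1, q2), point_segment_distance(p2, q1, q2),
               point_segment_distance(q1, p1, p2), point_segment_distance(q2, p1, p2))


def lanes_deconflicted(lane_a: List[Point], lane_b: List[Point]) -> bool:
    """Worst case: both aircraft drift toward each other by the full TSE."""
    gap = min(segment_distance(a1, a2, b1, b2)
              for a1, a2 in zip(lane_a, lane_a[1:])
              for b1, b2 in zip(lane_b, lane_b[1:]))
    return gap - 2 * RNAV1_TSE_NM >= LATERAL_MIN_NM
```

Because the lanes are fixed in advance, checks like this can be run once when the airspace is designed, leaving the agent to choose among lanes already known to be separated.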

Methodology & More

Agent Mallard continuously generates efficient baseline plans for each flight (climb, follow the assigned lane, descend) and then forward-simulates the next hour of operations in a high-fidelity digital twin that models wind, variability in pilot response, and a 15-minute communication loss. Whenever a loss of separation is predicted, the agent treats it as part of a sector-wide puzzle: it selects complementary clearances from a ranked strategy library (lane offsets, speed changes, climb/descent pairs), injects them into the flight plans, and re-simulates the entire sector to verify that no new conflicts have been created. The search uses depth-limited backtracking with pruning to find a coordinated, conflict-free solution while changing only the plan segments that cause trouble; the depth limit keeps this exploration structured and bounded.

The lane-based airspace design reduces lateral control to a discrete selection problem (which lane to use), which greatly simplifies safety verification and keeps the reasoning interpretable for human operators. Clearances are tied to geometric conditions (state-based triggers) rather than fixed times, which makes execution robust to timing variation.

Early walkthroughs with active controllers and instructors reported that Mallard’s decision patterns match familiar tactical reasoning and that its proposed manoeuvres are sensible for the tested scenarios. Next steps are systematic validation across the full Machine Basic Training syllabus, collection of quantitative performance metrics, and a structured comparison with human controllers’ solutions before any operational deployment can be considered. Depth-limited backtracking and the Guardrails Pattern offer complementary perspectives on safe, auditable automation.
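The deconfliction search can be illustrated with a toy depth-limited backtracking over a ranked strategy library. The simplifications are heavy and all hypothetical: each plan is reduced to a (flight level, lane) pair per flight, `sector_conflicts` stands in for re-simulating the sector on the digital twin, the interacting flight pairs and the strategy ranking are invented, speed changes are omitted, and the iterative-deepening wrapper `resolve` is an assumption rather than something the source describes.

```python
from typing import Callable, Dict, FrozenSet, List, Optional, Tuple

Plan = Dict[str, Tuple[int, str]]  # flight -> (flight level, lane "L" or "R")

# Hypothetical sector geometry: pairs of flights whose routes interact
INTERACTING: List[Tuple[str, str]] = [("A", "B"), ("B", "C")]
MIN_FL, MAX_FL = 250, 400

# Ranked strategy library (preferred first); speed changes omitted in this toy
STRATEGIES: List[Tuple[str, Callable[[int, str], Tuple[int, str]]]] = [
    ("lane offset", lambda fl, lane: (fl, "L" if lane == "R" else "R")),
    ("climb", lambda fl, lane: (fl + 10, lane)),
    ("descend", lambda fl, lane: (fl - 10, lane)),
]


def sector_conflicts(plan: Plan) -> List[Tuple[str, str]]:
    """Stand-in for re-simulating the whole sector: interacting flights
    conflict when they share both flight level and lane."""
    return [(a, b) for a, b in INTERACTING if plan[a] == plan[b]]


def backtrack(plan: Plan, modified: FrozenSet[str], depth: int) -> Optional[Plan]:
    conflicts = sector_conflicts(plan)
    if not conflicts:
        return plan                 # whole sector verified conflict-free
    if depth == 0:
        return None                 # depth limit reached on this branch
    for flight in conflicts[0]:     # only touch flights involved in a conflict
        if flight in modified:
            continue                # each flight is changed at most once
        fl, lane = plan[flight]
        for _name, change in STRATEGIES:
            new_fl, new_lane = change(fl, lane)
            if not MIN_FL <= new_fl <= MAX_FL:
                continue            # prune: level outside the sector
            candidate = {**plan, flight: (new_fl, new_lane)}
            now_modified = modified | {flight}
            # Prune: a conflict between two already-changed flights can never be fixed
            if any(a in now_modified and b in now_modified
                   for a, b in sector_conflicts(candidate)):
                continue
            result = backtrack(candidate, now_modified, depth - 1)
            if result is not None:
                return result
    return None


def resolve(baseline: Plan, max_depth: int) -> Optional[Plan]:
    """Iterative deepening: the first hit changes as few flights as the library allows."""
    for depth in range(max_depth + 1):
        result = backtrack(baseline, frozenset(), depth)
        if result is not None:
            return result
    return None
```

With three flights all at FL350 in lane R, `resolve(baseline, max_depth=3)` returns a plan that offsets only flight B into lane L, which clears both of B’s conflicts at once: a small example of a coordinated fix that leaves the unaffected plans untouched.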


Credibility Assessment:

The University of Exeter affiliation and authors with modest h-index values lend moderate credibility, although the work is an arXiv preprint.